The phrase "with great power comes great responsibility" is more relevant than ever for the field of Artificial Intelligence. As the capabilities of AI (and in particular Generative AI) continue to expand, the scope for negative repercussions of AI integration grows with them.

AxisOps is involved in several AI and Machine Learning projects. There's a constant demand to deliver fantastic products whilst balancing the risks associated with AI. We have therefore created a framework for AI-based projects that aligns with our core values:

- Ingenuity: Enabling us to build brilliant AI-driven solutions

- Diligence: Using robust machine learning methodologies, from initial exploration to final deployment

- Accountability: Prioritising interpretable AI solutions for maximum transparency

- Social Responsibility: Championing Ethical AI principles

This article explores our AI philosophy at a high level. It encapsulates our consultancy-driven approach, Machine Learning best practices, and Ethical AI considerations.

If you don't know the difference between "AI", "Generative AI" and "Machine Learning", then we highly recommend checking out this previous article.

Understanding the problem

It is all too easy to get swept up by the current wave of hype and try to solve every new problem with AI. But it is always valuable to take a step back and evaluate what the biggest pain points and time drains are in your business, and use this as the basis for where AI could deliver the greatest gains. Are there repetitive tasks that drain your resources? Is there untapped potential in your datasets? Once the key areas for improvement have been identified, it is possible to start bringing your AI vision to life.

Laying the foundations using data

Exploring the data

Without data, revolutionary AI technologies like ChatGPT and DALL-E wouldn't exist. For machines to learn, we first need to teach them with the highest-quality data we have available. How this data is obtained and manipulated is therefore critical to the performance of the eventual AI solution.

As discussed in our previous AI article, choosing the right AI "brain" is an important step in the engineering process. To make the right decision, one must first have a robust understanding of the data itself, including:

- How the data can be obtained

- The core parts of the data that are relevant to the use case

- How the data can be combined and manipulated to enhance relevant "signals" in the data

- What the underlying structures in the data are

Obtaining this core information is critical to the success of any Machine Learning solution.

Where is your data going?

While on the topic of data, who are you comfortable sharing your business's sensitive information with?

All Machine Learning technologies require training data to calibrate their behaviour, and all models require input data to ground their predictions. This training and input data may contain sensitive information like names, addresses, contracts, medical information or intellectual property. However, the majority of AI infrastructure is hosted by big tech companies like Amazon, Google, Meta and Microsoft, meaning your sensitive data has to be sent to their data centres across the planet for processing. It's at this point that control of your sensitive data is essentially forfeited. Not only are you at risk of losing access to your data, but the service providers may also choose to use your data for training their own AI models, potentially resulting in your data being exposed when their AI is deployed.

One option to reduce the risk of sensitive data being exposed is to implement a "Sovereign AI" solution. This ensures complete data privacy, placing you in direct control of all areas where your sensitive data is stored and processed. At AxisOps, we operate our own automation and AI infrastructure, giving you the option to deploy your services in a fully managed UK-based sovereign environment. We take data privacy very seriously and have gone the extra mile by achieving ISO 27001 certification. We also have experience developing Sovereign AI solutions that you can host within your own office, meaning your sensitive data needn't ever leave your building.

Calibrating AI "brains"

Once the data has been adequately prepared, attention can be turned to building the AI.

Choosing the right AI solution

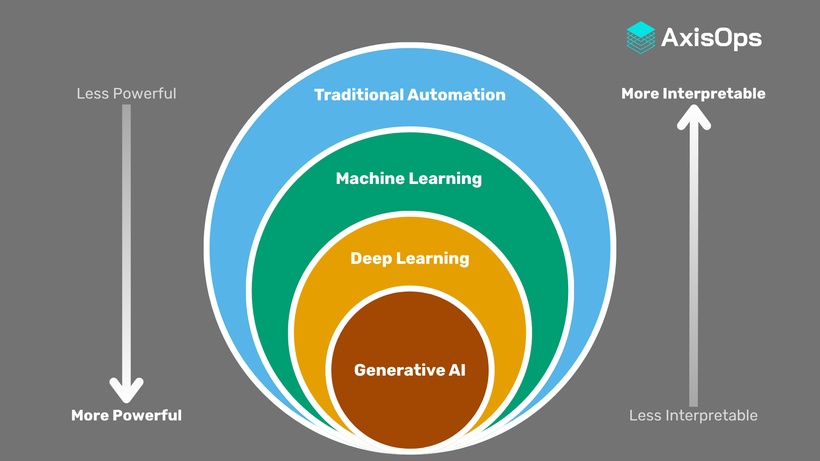

When developing bespoke Machine Learning solutions, the aim is always to represent the underlying structure of the data as accurately as possible. That means overcoming problems such as unreliable data, under-fitting and over-fitting, as discussed in our previous AI article. To complicate matters further, AI models that are more powerful are typically less interpretable, meaning they may exhibit unexpected and unpredictable behaviour. This also means, if something goes wrong, it is more difficult to diagnose why the AI did what it did.

So, what is the most effective way to protect against the potential negative repercussions of AI? It's simple: don't use AI if it's not actually needed!

More specifically, if a problem can be solved effectively with traditional software engineering, which is transparent and carries fewer side effects, then that's the approach to take. With all the hype surrounding AI, it can be tempting to unnecessarily over-engineer a solution using AI. For most tasks, traditional automation methods remain the preferred and most robust solution.

When problems are too complicated to be solved by standard automation practices, then a Machine Learning approach becomes a valid option - leveraging the data to automatically arrive at a solution. Nevertheless, the emphasis should remain on choosing the simplest Machine Learning method for the problem. That might mean using standard forecasting and classification tools instead of reaching for fancy Neural Networks. By following this ethos, it becomes easier to diagnose and fix issues if and when they arise.

An effective analogy to this approach is the green hierarchy of "Reduce, Re-use, Recycle", whereby the first step is to reduce. Although it's good to recycle a plastic bottle that was never used, the environmental damage would have been greatly reduced if the redundant bottle was never made in the first place. Similarly, by using the simplest solution in the AI hierarchy, we can save ourselves a lot of trouble in the future.
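One way to put this "simplest first" ethos into practice is to list candidate approaches from simplest to most complex, and only move up the list when the extra complexity buys a meaningful gain. The sketch below is illustrative only: the candidate names, the validation accuracies and the `tolerance` parameter are all assumptions made up for the example, not figures from a real project.

```python
from typing import List, Tuple

# Hypothetical candidates, ordered from simplest to most complex.
# The validation accuracies are invented purely for illustration.
CANDIDATES: List[Tuple[str, float]] = [
    ("rule-based automation", 0.78),
    ("logistic regression", 0.88),
    ("gradient-boosted trees", 0.90),
    ("neural network", 0.91),
]


def pick_simplest(candidates: List[Tuple[str, float]], tolerance: float = 0.05) -> str:
    """Return the first (simplest) candidate whose score is within
    `tolerance` of the best score in the list."""
    if not candidates:
        raise ValueError("candidates must not be empty")
    best = max(score for _, score in candidates)
    for name, score in candidates:
        if best - score <= tolerance:
            return name
    # Unreachable: the best-scoring candidate always satisfies the check above.
    raise AssertionError("no candidate selected")


print(pick_simplest(CANDIDATES))
```

With these made-up numbers, the neural network scores highest, but logistic regression sits within the tolerance and is far easier to interpret, so it is the one selected. The right tolerance is a judgement call to be agreed per project.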

A multi-pronged approach

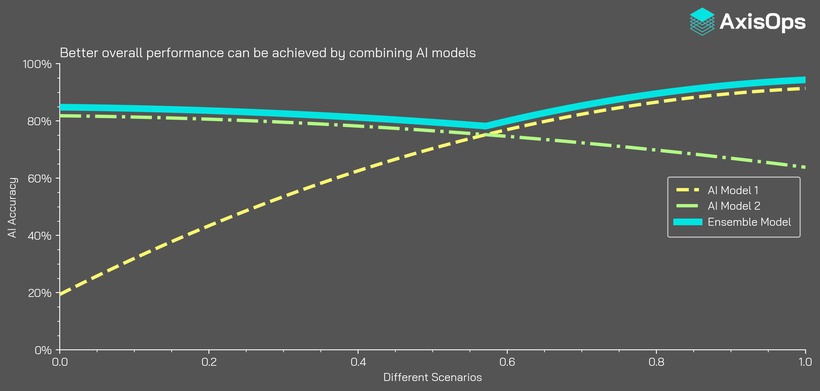

Instead of testing a single Machine Learning approach to solve a problem, it is always better to try a variety of technologies to find out what works best. Sometimes there is a clear winner, but it is often the case that similar performance is achieved with completely different approaches.

But what if there was a way of combining two AI models, each with an average accuracy of about 70%, to make a new AI model with an accuracy of about 80%? Despite sounding like fiction, this is in fact possible through the power of ensemble models.

Imagine you're great at maths but terrible at writing essays, and you meet someone with the opposite set of skills. If you team up, then you can now tackle a greater range of challenges. The same principle applies to ensemble models, which take two or more AI models and figure out their strengths and weaknesses. The result is a new AI model that uses the best attributes of the original models. It really is possible to have the best of both worlds.
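To make the idea concrete, here is a minimal sketch of one of the simplest ensemble techniques, "soft voting", where the scores of two models are averaged before a decision is made. The labels and scores are toy values invented for illustration, chosen so that the two models make different mistakes; real ensembles (such as stacking, which learns how much to trust each model) are more sophisticated, and the gain is never guaranteed.

```python
from typing import List

# Toy data invented for illustration: 1 = positive class, 0 = negative class.
LABELS = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

# Hypothetical scores (between 0 and 1) from two models with different weaknesses.
MODEL_A = [0.9, 0.8, 0.9, 0.4, 0.45, 0.1, 0.2, 0.1, 0.9, 0.3]
MODEL_B = [0.45, 0.4, 0.7, 0.9, 0.8, 0.6, 0.3, 0.2, 0.4, 0.2]


def accuracy(scores: List[float], labels: List[int], threshold: float = 0.5) -> float:
    """Fraction of examples where the thresholded score matches the label."""
    correct = sum(int(s >= threshold) == y for s, y in zip(scores, labels))
    return correct / len(labels)


def soft_vote(scores_a: List[float], scores_b: List[float]) -> List[float]:
    """Average the two models' scores, example by example."""
    return [(a + b) / 2 for a, b in zip(scores_a, scores_b)]


ENSEMBLE = soft_vote(MODEL_A, MODEL_B)

print("Model A: ", accuracy(MODEL_A, LABELS))
print("Model B: ", accuracy(MODEL_B, LABELS))
print("Ensemble:", accuracy(ENSEMBLE, LABELS))
```

On this toy data each model gets 7 out of 10 correct, while the averaged ensemble gets 9 out of 10. The improvement comes from the models being confident in different places: where one is unsure, the other often pulls the average in the right direction.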

Enforcing AI bias

It is common for AI to exhibit a bias towards a certain outcome due to its structure and training data. These biases are often harmless but can occasionally cause controversial issues such as sexist or racist behaviour - in these cases, bias should be eliminated at all costs. However, there are other situations where adding bias can be beneficial.

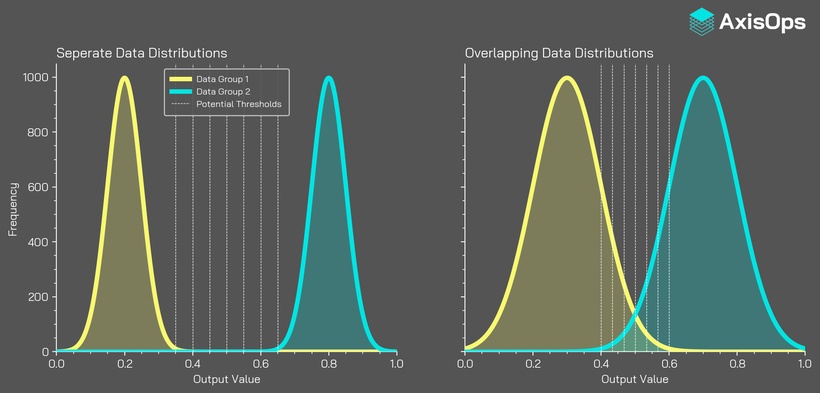

For classification problems, there is often a final calibration stage to determine at what point an AI function is triggered, and it can be helpful to add a bias to affect the outcome.

For example, say you have created an AI model to distinguish between pictures of cats and penguins. The AI takes an image as an input and gives a number between 0 and 1 as an output. A picture of a cat should return a value closer to 0, whereas a picture of a penguin should return a value close to 1.

Cats and penguins are very different, so it's unlikely there will be many images where a cat looks like a penguin or vice versa, meaning the data distributions for these groups will be well separated (left plot in the image above). This gives us a wide range of thresholds that could be chosen as the dividing line between "cat" and "penguin". This means a large amount of bias could be added to the threshold (in either direction) and the high accuracy of the AI model wouldn't be affected.

The situation would be very different if we were instead tasked with creating an AI model to distinguish between images of rats and mice. There would be many cases of rats looking like mice and vice versa. This would cause the data distributions to overlap (right plot in the image above), meaning placement of the dividing line between "rat" and "mouse" will have greater consequences:

- If the threshold is exactly in the middle, we would get an equal number of images classified incorrectly for both rats and mice

- If we nudge the threshold to the right, we may get all of the pictures of rats correct, but a large number of pictures of mice incorrect

- If we nudge the threshold to the left, we get the opposite effect

In these situations, it is critical that the potential consequences of AI "decisions" are discussed with key stakeholders, especially for safety-critical systems where (instead of rats and mice) you are trying to distinguish between standard and dangerous scenarios. When the consequences of missing a "dangerous" event would be severe, the bias of the AI should be shifted to ensure these events are never missed, even if this means many "standard" events trigger false positives.
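The trade-off can be sketched in a few lines of Python. The scores and labels below are toy calibration data invented for illustration, and the function names are our own. The "safety-first" rule shown here simply lowers the threshold until every known dangerous example is caught; it can only guarantee this for the data it was calibrated on, so in practice an extra safety margin and ongoing monitoring would be needed.

```python
from typing import List, Tuple

# Toy calibration data invented for illustration.
# Label 1 = "dangerous" event, label 0 = "standard" event.
SCORES = [0.9, 0.7, 0.55, 0.35, 0.1, 0.2, 0.3, 0.4, 0.45, 0.6]
LABELS = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]


def error_counts(scores: List[float], labels: List[int], threshold: float) -> Tuple[int, int]:
    """Return (missed dangerous events, false alarms) when any score at or
    above `threshold` is treated as dangerous."""
    missed = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    false_alarms = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return missed, false_alarms


def safety_first_threshold(scores: List[float], labels: List[int]) -> float:
    """Highest threshold that still catches every dangerous example in the data."""
    return min(s for s, y in zip(scores, labels) if y == 1)


neutral = 0.5
biased = safety_first_threshold(SCORES, LABELS)

print("Neutral threshold:", neutral, error_counts(SCORES, LABELS, neutral))
print("Biased threshold: ", biased, error_counts(SCORES, LABELS, biased))
```

With this toy data, the neutral threshold of 0.5 misses one dangerous event and raises one false alarm. Shifting the threshold down to 0.35 catches every dangerous event, at the cost of three false alarms. Whether that cost is acceptable is exactly the conversation to have with stakeholders.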

Taming Generative AI

There is a greater demand than ever for leveraging the power of Generative AI to tackle tasks that would previously have been impossible to automate. However, as we discussed in a previous article, there is a high risk of AI hallucinations when using these technologies. In short, the GenAI may do something unexpected, such as providing false or inaccurate information. As such, it's important to add layers of redundancy to mitigate the risk of negative consequences from hallucinations, such as:

- Pre-Processing: Where necessary, normalise input data to a format that is optimal for the chosen GenAI tool

- Prompt Engineering: Use data to optimise prompts for higher accuracy

- Structured Outputs: Ensure that output data is in a consistent format that can be easily validated and ingested by subsequent data pipelines

- Validation: Perform checks on the GenAI outputs to catch common hallucinations

- Evidencing: Where possible, capture the original source of the information provided

- Reporting: Collate relevant information and provide notifications or reports for humans to review
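As a rough illustration of how the Structured Outputs, Validation, Evidencing and Reporting layers can fit together, the sketch below checks a GenAI response before it is passed downstream. Everything here is an assumption made for the example: the JSON shape with `answer` and `evidence` fields, the function name, and the rule that the quoted evidence must appear verbatim in the source document. It is not tied to any particular GenAI product.

```python
import json
from typing import List

# Hypothetical output schema: field name -> expected Python type.
REQUIRED_FIELDS = {"answer": str, "evidence": str}


def validate_genai_output(raw_output: str, source_text: str) -> List[str]:
    """Return a list of issues for a human to review (empty if none found)."""
    # Structured Outputs: the response must be a JSON object.
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]
    if not isinstance(data, dict):
        return ["output is not a JSON object"]

    issues: List[str] = []

    # Validation: every required field must be present with the right type.
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            issues.append(f"missing or invalid field: {field}")

    # Evidencing: the quoted evidence must appear verbatim in the source.
    evidence = data.get("evidence")
    if isinstance(evidence, str):
        if not evidence.strip() or evidence not in source_text:
            issues.append("evidence not found in source text")

    return issues


SOURCE = "The invoice total is 1,250 GBP and is due on 1 March."
GOOD = '{"answer": "1,250 GBP", "evidence": "The invoice total is 1,250 GBP"}'
BAD = '{"answer": "1,520 GBP", "evidence": "The invoice total is 1,520 GBP"}'

# Reporting: any issues found would be surfaced for human review.
print(validate_genai_output(GOOD, SOURCE))
print(validate_genai_output(BAD, SOURCE))
```

The second response contains a plausible but incorrect figure, and it is flagged because its quoted evidence cannot be found in the source document. A verbatim match is a deliberately strict check; it will not catch every hallucination, but it cheaply filters out a common class of them.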

Considerations for Ethical AI

"Ethical AI" concerns the design of Artificial Intelligence systems that are aligned with human values such as fairness, transparency and accountability. The scope of Ethical AI is broad, ranging from topics such as data privacy and AI bias to concepts like weaponised AI and machine consciousness.

Ethical AI is a cornerstone of AxisOps' AI philosophy, aligned with three of our core values: Diligence, Accountability and Social Responsibility. As previously highlighted, AxisOps takes the transparency and biases of its AI models extremely seriously, and adds layers of redundancy to ensure that clients are supplied with powerful, but robust, solutions.

How this AI philosophy powers AxisOps

AxisOps is consultancy-led, with a passion for transforming your big ideas into brilliant AI-driven solutions. Our expertise in Sovereign AI and our ISO 27001 certification place data security at the centre of everything we do. This, combined with a consistent and robust AI framework aligned with our core values, enables us to deliver powerful AI solutions to meet your exact needs.

Let's talk AI

Do you have big ideas for leveraging AI?

AxisOps are experts in the delivery of bespoke AI solutions, versatile Neural Networks and GenAI integration.

Start your AI journey