Have you ever used an AI tool and received a response that made no sense? Has AI ever lied to you? If so, then you have probably been a victim of AI "hallucinations". They are one of the biggest problem areas in Generative AI today, but what are they, and why do they occur?

In this article, we will be talking about "Machine Learning" concepts and how they relate to "Generative AI". If you don't know the difference between "AI", "Generative AI" and "Machine Learning" then we highly recommend checking out this previous article.

What are hallucinations?

AI hallucinations are outputs from a Generative AI where something is incorrect or makes no sense. For example:

- A text-generation AI may tell you that the capital of France is not Paris

- An image-generation AI may draw humans with more than two hands

- A video-generation AI may portray motion that is unnatural or impossible

We recommend checking out the AI Fails subreddit to explore the many ways that Generative AI gets things wrong.

Generally speaking, you can expect at least 1-in-100 GenAI prompts to result in a response that is incorrect. These hallucinations are annoying at best and dangerous at worst, with long-term repercussions that are not yet fully understood.

So, if Generative AI gets things wrong so often, can't we just fix it to prevent hallucinations from happening? Unfortunately, it's not that simple. Hallucinations can arise in several ways, and some causes are easier to fix than others. For the rest of this article, we will draw comparisons to text-generation AI like ChatGPT and DeepSeek, but similar concepts apply to other GenAI technologies.

Types of hallucinations

There are various mechanisms by which a hallucination could occur. In this article, we will look at the following:

- Three ways in which unreliable data causes issues

- Choosing the right AI "brain"

- Issues specific to text-generation AI

Part 1: Hallucinations due to unreliable data

You could have the most sophisticated AI framework in the world, but if the data used to train it is insufficient, incomplete or incorrect then the result won't be fit for purpose and the AI will make mistakes.

Part 1A: When AI ingests lies

If you had been told your whole life that the world is flat, how would you approach a geography exam? If you had one parent who was religious and another who was atheist, would you be more likely to hold a belief somewhere in the middle? Humans form beliefs and make decisions based on the data they have been exposed to, and AI is no different.

One of the famous concepts of Machine Learning is "Garbage in, Garbage out". In other words, an AI will only ever be as good as the data used to train it.

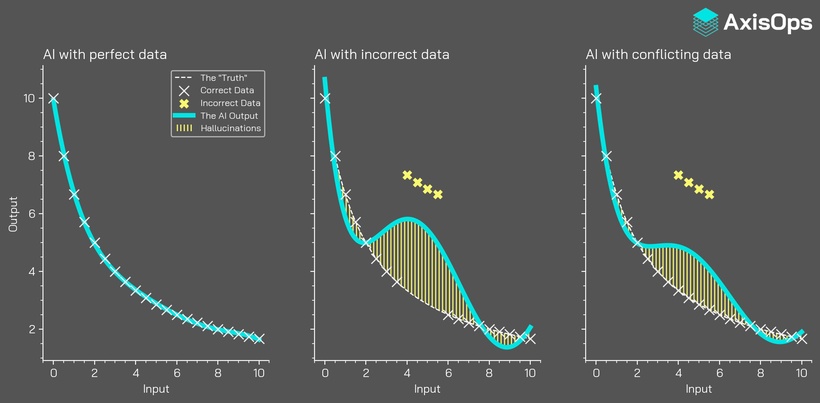

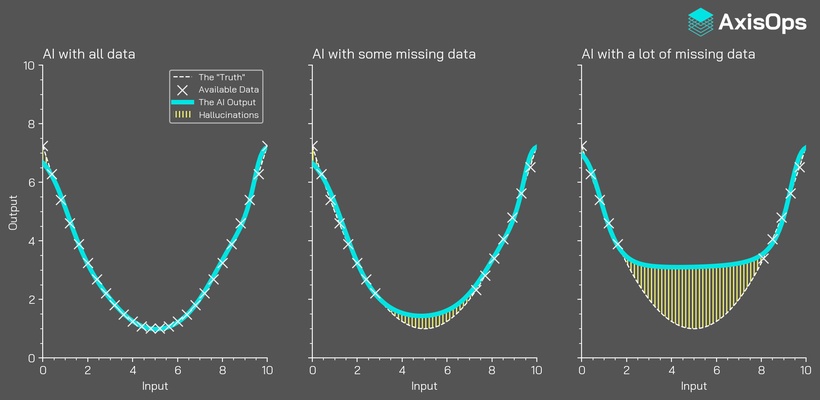

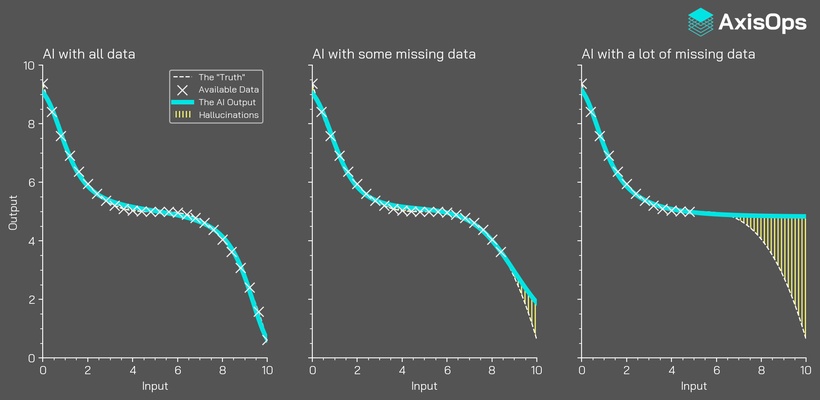

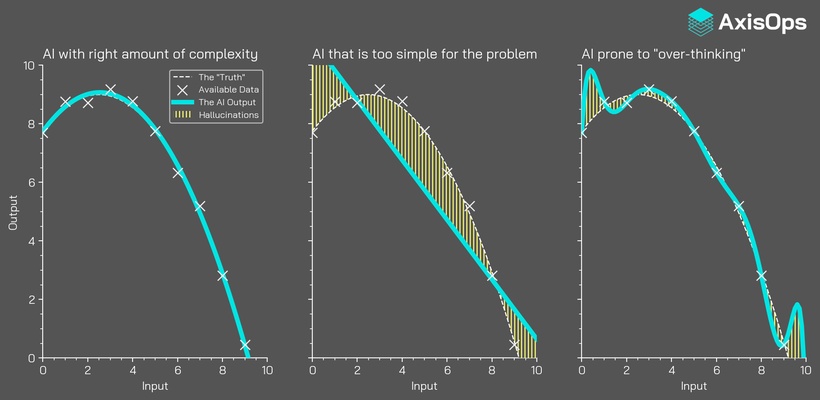

The images above demonstrate that even a small amount of anomalous data can cause big changes in the AI's behaviour. Hallucinations occur not only in regions where the data is bad: ripple effects can also make AI results inaccurate in regions where the data is of good quality.
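To make the ripple effect concrete, here is a minimal sketch in Python. It is a toy illustration, not how any real GenAI system is trained: the "model" is a simple straight-line fit, and the data (ten points on the line y = 2x, with one deliberately corrupted value) is invented for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Clean training data: ten points lying exactly on the line y = 2x.
xs = [float(x) for x in range(10)]
clean_ys = [2.0 * x for x in xs]

# "Garbage in": corrupt only the last point (its true value would be 18).
dirty_ys = clean_ys[:-1] + [100.0]

clean_slope, clean_intercept = fit_line(xs, clean_ys)
dirty_slope, dirty_intercept = fit_line(xs, dirty_ys)

# The intercept is the prediction at x = 0, the point furthest from the bad data.
print(f"Prediction at x = 0 with clean data: {clean_intercept:.2f}")
print(f"Prediction at x = 0 with one bad point: {dirty_intercept:.2f}")
```

Only the point at x = 9 was corrupted, yet the prediction at x = 0 shifts noticeably. The bad value drags the whole fitted line with it, so the error spreads to a region where the data was perfectly good.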

For Generative AI like ChatGPT, the majority of the data is collected from online sources, but the internet is full of mistakes, contradictions and deceit:

- Statements are often made without being fact-checked

- Opinion articles are often presented as fact

- Fictional content (like novels and film scripts) is just as prominent as factual content

- Fake news articles are more prominent than ever

In such a world, it's not surprising that GenAI produces unexpected results. The developers of Generative AI arguably have a near-impossible job. The datasets required to train GenAI are necessarily vast and would take more than a lifetime to analyse, so mistakes and biases within the datasets are inevitable.

Part 1B: Interpolated hallucinations - "Educated" guesses

Even for data with no mistakes, hallucinations might still occur - especially if data is missing.

Imagine you need to solve a problem but lack a crucial piece of information. For example, you want to bake a chocolate cake, but you only know how to make a Victoria sponge and a chocolate muffin. You could use your existing experience and knowledge to try making the chocolate cake - the result might not be perfect, but it might be close to what you intended.

This process of filling in the gaps is called interpolation, and is intrinsically used by Neural Networks.

However, the more data is missing, the less nuance the AI is able to capture, so greater mistakes become possible.
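The effect of missing data can be sketched in a few lines of Python. This is an analogy only: simple linear interpolation stands in for what a Neural Network does internally, and the sine curve and sample points are assumptions chosen for illustration.

```python
import math


def interpolate(known_xs, known_ys, x):
    """Linearly interpolate between the two known points that surround x."""
    pairs = list(zip(known_xs, known_ys))
    for (x0, y0), (x1, y1) in zip(pairs, pairs[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("x lies outside the known data")


# The "truth" we are trying to learn is a sine curve.
dense_xs = [i * 0.5 for i in range(7)]  # seven known points: 0.0, 0.5, ..., 3.0
sparse_xs = [0.0, 3.0]                  # only two known points

query = 1.25
true_value = math.sin(query)

dense_guess = interpolate(dense_xs, [math.sin(x) for x in dense_xs], query)
sparse_guess = interpolate(sparse_xs, [math.sin(x) for x in sparse_xs], query)

dense_error = abs(dense_guess - true_value)
sparse_error = abs(sparse_guess - true_value)

print(f"Error with seven known points: {dense_error:.3f}")
print(f"Error with two known points:   {sparse_error:.3f}")
```

With seven known points the educated guess lands close to the true value. With only two, the curve between them is lost entirely and the guess is far off.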

Part 1C: Extrapolated hallucinations - Predicting outcomes

How confident would you feel about flying a spaceship to the International Space Station right now? Presumably not very confident, but only because this task is so far removed from your own experiences and skills. You can't simply "fill in the gaps" in your knowledge. But how would you feel if:

- You knew how to fly a plane?

- You also had experience with fighter jets?

- You also designed the spaceship?

At this point, you might feel a lot more confident about the mission. The more relevant data you have, the better your chances, though because the task is still so different to any other experience you've had, you are heading into the unknown no matter how well you prepare.

The concept of "going where no one has gone before" is analogous to the concept of extrapolating data. Unlike interpolation, you aren't "surrounded" by data when you extrapolate so it's not trivial to make an "educated guess". The idea is similar to predicting the future: you can use data from the past and present, but there's nothing from the future to anchor your forecast. As a result, predictions tend to become less reliable the further ahead you look.
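As a rough illustration, the Python sketch below fits a straight line to data that only covers the range 0 to 1, then asks it about points further and further outside that range. The curve (y = x squared) and the query points are assumptions made for this example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def truth(x):
    """The real pattern, which the model only ever sees between 0 and 1."""
    return x ** 2


train_xs = [i / 10 for i in range(11)]  # 0.0, 0.1, ..., 1.0
train_ys = [truth(x) for x in train_xs]
slope, intercept = fit_line(train_xs, train_ys)


def error_at(x):
    """Absolute gap between the model's prediction and the truth at x."""
    return abs((slope * x + intercept) - truth(x))


for x in [0.5, 2.0, 5.0]:
    print(f"x = {x}: prediction is off by {error_at(x):.2f}")
```

Inside the training range (x = 0.5) the error is small. It grows at x = 2 and grows much further at x = 5, because nothing in the training data anchors the prediction out there.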

Extrapolation errors also apply to Generative AI. If asked about something it has no specific data for, it will still try its best, but the response is likely to be inadequate or inaccurate. This is why GenAI services are regularly updated to keep up with new events and concepts that didn't previously exist.

Part 2: Hallucinations due to the structure of the AI "brain"

Hallucinations aren't all about unreliable data - the structure and complexity of the AI "brain" is also important. As implied in the examples above, data can take on many different shapes and sizes, so we need an AI model that can be molded to fit the data.

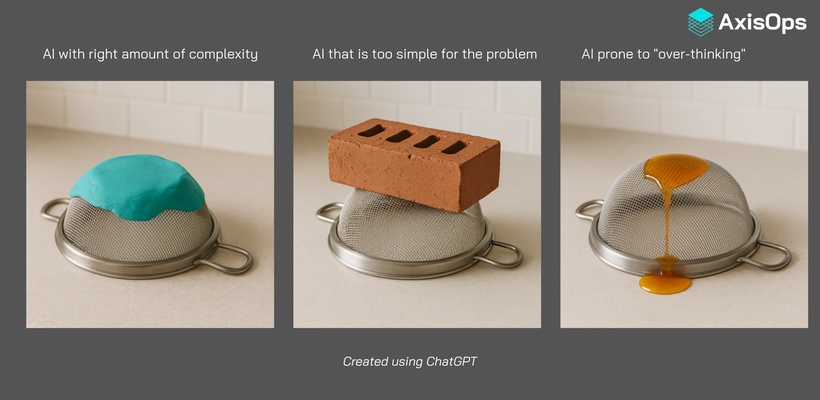

Let's imagine a sieve lying upside down on a table, and that this is the "data" we're trying to recreate. If the AI was made of playdough, then we could easily manipulate it to cover the sieve. When the playdough is removed, it preserves the shape of the sieve - we have created a "model" of our sieve using the playdough. AI needs to be similarly malleable, so that it can model the data used to train it.

If the AI was made of something solid like a brick, then we wouldn't be able to bend it to recreate the sieve's shape - i.e. the AI is unable to learn the shape of the sieve. In Machine Learning, this concept is called "under-fitting". The concept is similar to trying to teach your cat to speak English - cats' brains simply aren't designed to understand such complicated concepts.

If the AI was made of something too malleable, like honey, then it would seep through the holes of the sieve - i.e. the AI is too flexible for the task at hand. In Machine Learning, this concept is called "over-fitting". The concept is similar to over-thinking a situation. We've all been confronted with a problem and over-thought it, only for the answer to turn out to be the simplest solution possible. The same can happen with AI.

AI scientists and engineers have a tough job creating models with properties that are just right, despite sometimes having terabytes of data with unknown patterns and structures.
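The brick, playdough and honey can be sketched as three toy models in Python. Everything here is an assumption for illustration: the true pattern is y = 2x, the training data carries some alternating noise, the "brick" always predicts the average, the "playdough" is a straight-line fit, and the "honey" simply memorises the nearest training point.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_abs_error(model, xs, ys):
    """Average absolute gap between the model's predictions and the answers."""
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)


# Training data: the true pattern y = 2x plus alternating +1 / -1 "noise".
train_xs = [float(x) for x in range(10)]
train_ys = [2 * x + (1 if i % 2 == 0 else -1) for i, x in enumerate(train_xs)]

# Unseen data: new x values with the true, noise-free answers.
test_xs = [x + 0.25 for x in range(9)]
test_ys = [2 * x for x in test_xs]

mean_y = sum(train_ys) / len(train_ys)
slope, intercept = fit_line(train_xs, train_ys)


def brick(x):
    """Too rigid (under-fitting): always predicts the training average."""
    return mean_y


def playdough(x):
    """Just right: a straight line fitted to the training data."""
    return slope * x + intercept


def honey(x):
    """Too flexible (over-fitting): copies the nearest training point, noise included."""
    nearest = min(range(len(train_xs)), key=lambda i: abs(train_xs[i] - x))
    return train_ys[nearest]


for name, model in [("brick", brick), ("playdough", playdough), ("honey", honey)]:
    train_err = mean_abs_error(model, train_xs, train_ys)
    test_err = mean_abs_error(model, test_xs, test_ys)
    print(f"{name:10s} training error {train_err:.2f}   unseen error {test_err:.2f}")
```

The honey model scores a perfect zero on the data it was trained on, because it has memorised every point including the noise. On unseen data it does worse than the simpler playdough model. The brick does poorly everywhere.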

Part 3: Hallucinations by design

What if we told you that some Generative AI applications are designed to hallucinate?

Text-generation AI typically works by taking a paragraph of text (for example, the prompt you have typed) and trying to predict the next word. Once that word has been chosen, it is added to the initial paragraph and fed into the AI once again to predict the next word after that. This is done again and again until the whole output has been generated. This is why text-generation AI like ChatGPT and DeepSeek provide their outputs to your screen word-by-word, instead of providing the full output instantly.
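The predict-append-repeat loop can be sketched as follows. The "model" here is a hypothetical lookup table that only looks at the last word, which is a drastic simplification of a real language model, and it always returns the same word for the same input.

```python
# Hypothetical stand-in for a trained model: maps the last word to the next word.
NEXT_WORD = {
    "the": "capital",
    "capital": "of",
    "of": "france",
    "france": "is",
    "is": "paris",
    "paris": "<end>",
}


def predict_next_word(text):
    """Toy 'model': looks only at the last word of the text so far."""
    last_word = text.split()[-1]
    return NEXT_WORD.get(last_word, "<end>")


def generate(prompt, max_words=20):
    """Repeatedly predict one word and feed the longer text back in."""
    text = prompt
    for _ in range(max_words):
        word = predict_next_word(text)
        if word == "<end>":
            break
        text = text + " " + word
    return text


print(generate("the capital"))
```

Each pass through the loop adds exactly one word, which mirrors the word-by-word output you see on screen.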

The problem is, it's not always obvious what the next word in a sentence should be, and human language allows for versatility in how we communicate. For example, what is the next word in the sentence "The best city in the UK is..."? That would depend on your opinion. The AI doesn't have an opinion, but has been trained on thousands of datasets in which people voice their own opinions on which cities are good and bad. The AI essentially aggregates this data and gives each city a probability of being the next word. If "London" has the highest probability of 23%, will the AI always choose that? No - "London" will only be the chosen answer 23% of the time when you use that prompt. Therefore, if you wrote the same prompt again, there would be a 77% chance of getting a city other than "London".

This design choice has been key to the success of text-generation AI. If the AI always chose the most probable next word, it would sound robotic and repetitive. Instead, by introducing an element of randomness, the AI will sound more creative and interesting like we do as humans. The drawback is that it can sometimes produce sentences that are improbable or inaccurate.
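Here is a minimal sketch of the difference between always picking the most probable word and picking at random according to the probabilities. Only the 23% for "London" comes from the example above. The other cities and their probabilities are made up for illustration.

```python
import random

# Probability of each city being the next word. Only London's 23% is from the
# article's example; the remaining values are hypothetical.
CITY_PROBABILITIES = {
    "London": 0.23,
    "Edinburgh": 0.20,
    "Manchester": 0.19,
    "Bristol": 0.19,
    "Cardiff": 0.19,
}


def pick_most_probable(probabilities):
    """Always choose the single most likely word (robotic and repetitive)."""
    return max(probabilities, key=probabilities.get)


def pick_at_random(probabilities, rng):
    """Choose a word at random, weighted by its probability."""
    words = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
samples = [pick_at_random(CITY_PROBABILITIES, rng) for _ in range(10_000)]
london_share = samples.count("London") / len(samples)

print("Most probable choice:", pick_most_probable(CITY_PROBABILITIES))
print(f"Share of random picks that were London: {london_share:.1%}")
```

Over many runs, "London" comes up in roughly 23% of the random picks, even though it is the single most likely answer every time.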

A good example is asking a GenAI model for the capital of South Africa, as it has three capitals, not one. Even a simple question like the capital of France can sometimes return "Versailles", since it held that role until 1789 and appears frequently in the data the AI has learned from.

It is therefore important to note that there is always a chance, no matter how small, that Generative AI will tell you something that isn't true - even if the data used to train the AI had no mistakes and the AI "brain" was a perfect fit for that dataset.

The feedback loop of doom

We have talked about how conflicting and incorrect data can cause AI to say things that aren't correct or don't make sense, but what if things only get worse in the future?

Until recently, Generative AI models had the benefit of being trained on data (mostly) created by humans. However, the internet is now littered with AI-generated content, including the countless hallucinated mistakes that we've been talking about. With the differences between human-made and AI-made content harder to distinguish than ever, the next generation of AI will likely be trained on a huge amount of AI-generated data. Hallucinated content may therefore become treated as "fact", and it could become increasingly difficult to determine the truth and the origins of incorrect information.

The below video explains this issue further:

Will hallucinations ever go away?

There is arguably no single solution to fixing AI hallucinations:

- Data needs to be more thoroughly reviewed (without human bias) before being used for AI

- Gaps in datasets need to be populated

- More research must be done to optimise how AI "brains" are structured

Incremental progress is happening and hallucination rates are slowly dropping, but OpenAI themselves have stated that "accuracy will never reach 100%".

Another part of the problem is that AI products are usually judged by overall accuracy rather than by how often they hallucinate. This drives companies to focus on maximising accuracy scores, even if it increases the risk of false outputs.

The saying "even a broken clock is right twice a day" is applicable here, because "broken" features are added to AI models that may boost accuracy by 1% but also increase the risk of hallucinations by 5%.

Fortunately, the tide is starting to turn: OpenAI and other AI providers are beginning to sacrifice some of their accuracy scores and allow their AI models to more readily say "I don't know" when the right answer isn't clear.
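The trade-off between accuracy and hallucinations is easier to see with numbers. The sketch below compares two hypothetical models answering 100 questions. All of the counts are invented for illustration and do not describe any real product.

```python
def accuracy(correct, wrong, abstained):
    """Share of all questions answered correctly."""
    return correct / (correct + wrong + abstained)


def hallucination_rate(correct, wrong, abstained):
    """Share of all questions answered confidently but incorrectly."""
    return wrong / (correct + wrong + abstained)


# Hypothetical results over 100 questions.
always_answers = {"correct": 75, "wrong": 25, "abstained": 0}  # guesses when unsure
admits_doubt = {"correct": 70, "wrong": 5, "abstained": 25}    # says "I don't know"

for name, counts in [("Always answers", always_answers), ("Admits doubt", admits_doubt)]:
    print(
        f"{name}: accuracy {accuracy(**counts):.0%}, "
        f"hallucination rate {hallucination_rate(**counts):.0%}"
    )
```

Judged on accuracy alone, the model that always guesses looks better. It also gives five times as many wrong answers, which is the cost that an accuracy score hides.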

Mitigating hallucinations

What can you do?

The first step to protecting yourself from AI hallucinations is understanding how and why they happen, as we have sought to address in this article.

As we have seen, hallucinations are common. Here are some general tips to avoid negative consequences:

- When using GenAI, write prompts in a clear and structured way, leaving nothing to interpretation

- Approach outputs with skepticism and don't treat any AI response as absolutely true or correct

- Look for reliable sources that confirm the information provided; if there aren't any, it is probably made up!

- Challenge the AI (or other sources) for counter-points or alternative viewpoints for the given topic

AxisOps' approach

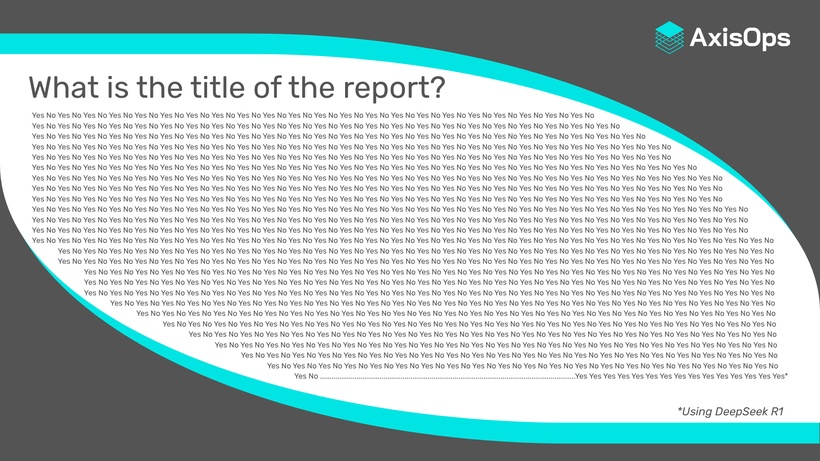

We aren't immune to AI hallucinations at AxisOps. One of our favourite examples of a hallucination came from a routine AI task to extract the title of a PDF we were analysing. Instead of returning the actual title, the GenAI product (DeepSeek R1) seemingly had a crisis for 30 minutes (response shown in the image above).

At AxisOps, we utilise a wide range of Machine Learning and AI solutions. We add layers of redundancy to reduce the risk of hallucinations and help avoid unexpected behaviour.

For example, we use Generative AI to extract data from unstructured legal documents. To ensure that the AI response isn't hallucinated, we verify that the generated text appears in the original document. Moreover, we record each location where the text appears and compile this information into a report for a human to review. If the extracted data is not present in the document, then a human-in-the-loop is notified that the data may not be reliable.
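The general idea of this check can be sketched in Python as below. This is a simplified illustration and not our production code: the function names, the report layout, the exact-match search and the sample document are all assumptions made for the example.

```python
def find_occurrences(document, extracted):
    """Return every character offset at which `extracted` appears in `document`."""
    if not extracted:
        return []  # an empty value can never be verified
    positions = []
    start = document.find(extracted)
    while start != -1:
        positions.append(start)
        start = document.find(extracted, start + 1)
    return positions


def verify_extraction(document, extracted_fields):
    """Build a review report: where each value was found, and whether to flag it."""
    report = {}
    for field, value in extracted_fields.items():
        positions = find_occurrences(document, value)
        report[field] = {
            "value": value,
            "positions": positions,
            "needs_review": not positions,  # not found, so possibly hallucinated
        }
    return report


# Hypothetical document and AI output, invented for this example.
document = (
    "This Lease is made between Alice Smith and Bob Jones. "
    "Alice Smith agrees to pay rent."
)
ai_output = {"tenant": "Alice Smith", "landlord": "Carol White"}

report = verify_extraction(document, ai_output)
for field, result in report.items():
    status = "FLAG FOR HUMAN REVIEW" if result["needs_review"] else "found"
    print(f"{field}: {result['value']!r} {status} at {result['positions']}")
```

In this example, "Alice Smith" is found twice and both locations are recorded, while "Carol White" never appears in the document and is flagged for a human to check. An exact-match search like this would also flag values that the AI has merely reformatted, so a real system would need to decide how strict the match should be.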

Let's talk AI

Do you have big ideas for leveraging AI?

AxisOps are experts in the delivery of bespoke AI solutions, versatile Neural Networks and GenAI integration.

Start your AI journey